Like it or not, online beer ratings have been one of the big drivers of craft beer over the last 20 years. As a brewery, you don't need to cater to them, but high scores can drive sales and excitement.

I spent a good deal of time on BeerAdvocate during my first few years of beer drinking (2005-2008). Reading others' reviews was beneficial for my palate and beer vocabulary. I reviewed a couple hundred beers, which gave me the confidence to "review" my homebrew for this blog. However, there were aspects of trying to track down all the top beers that weren't entirely healthy, whether it was fear of missing out on a new release or the thrill of the catch outweighing the enjoyment of actually drinking the beer. I find the sheer number of new beers now freeing: there is no way to try them all, so I don't try!

Now that Untappd is the dominant player, I'll glance at reviews (especially for one of our new releases), but I don't rate. It's rare to see a review that has much insight into the beer. Even the negative ones are rarely constructive. As an aside, I find it a bit weird when people in the local beer industry rate our offerings. Generally they are kind, but it just seems strange to publicly review "competing" products.

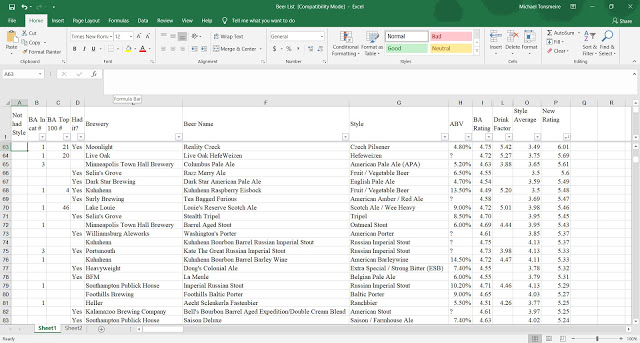

For four or five years I maintained a spreadsheet to track the beers I drank and those I wanted to try. I weighted the beers not just on their average BeerAdvocate score, but on the score relative to their style. That is to say, I was more interested in trying a Czech Pilsner rated 4.2 than a DIPA at 4.3, because Pilsners generally have lower scores. If all you drink are the top-rated beers, you'll be drinking mostly the same handful of styles from a small selection of breweries. Why is that though?
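To make the style-relative idea concrete, here is a minimal Python sketch. The beer names and scores are made up for illustration, and subtracting the style's mean score is just one simple way to do the weighting (not necessarily how the spreadsheet worked).

```python
from statistics import mean

def style_relative_scores(beers):
    """Return each beer's score minus the mean score of its style."""
    scores_by_style = {}
    for name, style, score in beers:
        scores_by_style.setdefault(style, []).append(score)
    style_mean = {style: mean(scores) for style, scores in scores_by_style.items()}
    return {name: round(score - style_mean[style], 2) for name, style, score in beers}

# Hypothetical beers and scores, for illustration only.
beers = [
    ("Pils A", "Czech Pilsner", 4.2),
    ("Pils B", "Czech Pilsner", 3.6),
    ("Pils C", "Czech Pilsner", 3.6),
    ("DIPA A", "DIPA", 4.3),
    ("DIPA B", "DIPA", 4.2),
    ("DIPA C", "DIPA", 4.1),
]

relative = style_relative_scores(beers)
# Sort by how far each beer sits above (or below) its own style's average.
ranked = sorted(relative, key=relative.get, reverse=True)
print(ranked)
```

With these made-up numbers the 4.2 Pilsner sits 0.4 above its style average while the 4.3 DIPA sits only 0.1 above its own, so the Pilsner ranks first even though its raw score is lower.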

Whether it is the BeerAdvocate Top 100, RateBeer's Top 50, or Untappd's Top Beers, they all show a similar bias towards strong adjunct stouts, DIPAs, and fruited sours. I don't think the collective beer-rater score aligns with what the average beer drinker enjoys the most or drinks regularly. It is the result of a collection of factors that are inherent to this sort of hedonistic rating system.

So what makes beers and breweries score well?

Big/Accessible Flavors

People love assertive flavors. Once you've tried a few hundred (or thousand) beers, it is difficult to get a "wow" response from malt, hops, and yeast. This is especially true in a small sample or in close proximity to other beers (e.g., tasting flight, bottle share, festival). So many of the top beers don't taste like "beer"; they taste like maple, coconut, bourbon, chocolate, coffee, cherries, etc. If you say there is a flavor in the beer, everyone wants to taste it... Looking at reviews for our Vanillafort, it is amazing how divergent the experiences are. Despite a (to my palate) huge vanilla flavor (one bean per 5 gallons), some people don't taste it.

Sweetness is naturally pleasant. It's a flavor our palates evolved to prefer over sour/bitter because it is a sign of safe calories. That said, too much can also make a beer less drinkable. I enjoy samples of pastry stouts, but most of them don't call for a second pour. Balance between sweet-bitter or sweet-sour makes a beer that calls for another sip, and a second pour.

Even the most popular IPAs have gone from dry/bitter to sweet/fruity. They are less of an acquired taste and more enjoyable to a wider spectrum of drinkers. I'm amazed how many of the contractors, delivery drivers, and other non-beer nerds who visit the brewery mention that they are now into IPAs.

If you want a high brewery average, one approach is simply to not brew styles that have low average ratings. That said, for tap room sales it can really help to have at least one "accessible" beer on the menu. For us that has always been a low-bitterness wheat beer with a little yeast character and a fruity hop aroma. Those beers' scores drag our average down, but it is worth it for us.

Exclusivity

The easier a beer is to obtain, the more people will try it. The problem is that you don't want everyone rating your beer. To get high scores it helps to apply a pressure that causes only people who are excited about the beer to drink (and rate) it. This can take a variety of forms, but the easiest is small production paired with a high price point and limited distribution. You can make the world's best sour beer, but if it is on the shelf for $3 a bottle at 100 liquor stores, you'll get plenty of people who hate sour beers sampling it. Even with our relatively limited availability we get reviews like "My favorite sour beer ever!" 1.5 stars... The problem with averages is that a handful of really low scores have a big impact.
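A quick bit of arithmetic shows how much a handful of low scores can move an average. The ratings below are hypothetical (45 fans at 4.5 and 5 drinkers who dislike the style at 1.5), chosen only to illustrate the effect.

```python
from statistics import mean, median

# Hypothetical ratings: 45 fans of the style plus 5 drinkers who dislike it.
fan_ratings = [4.5] * 45
mismatched_ratings = [1.5] * 5
all_ratings = fan_ratings + mismatched_ratings

fans_only_mean = mean(fan_ratings)
overall_mean = mean(all_ratings)
overall_median = median(all_ratings)

print(f"fans only: {fans_only_mean:.2f}")  # 4.50
print(f"everyone:  {overall_mean:.2f}")    # 4.20
print(f"median:    {overall_median:.2f}")  # 4.50
```

In this made-up case, one rater in ten pulls the mean down by 0.3, while the median does not move at all.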

I'll be interested to see how our club-exclusive bottles of sour beer rate compared to the ones available to the general public. The people who joined self-identified as fans of ours and of sour beers. My old homebrewing buddy Michael Thorpe has used clubs to huge success at Afterthought Brewing (around #20 on Untappd's Top Rated Breweries). In addition to directing his limited volume towards the right consumers, clubs allow him to brew the sorts of weird/esoteric (delicious) beers that might not work for a general audience (gin barrels, buckwheat, dandelions, paw paw, etc.).

As noted above, some styles have higher average reviews than others. Simply not brewing low-rated styles goes a long way towards ensuring a high overall brewery average. Anytime I feel like one of our beers is underappreciated, I go look at the sub-4 average of Hill Farmstead Mary, one of my favorite beers. Afterthought recently announced a new non-sour brand, which will prevent beer styles with lower averages from "dragging down" the average for Afterthought.

I remember there being debate over the minimum serving size for a review on BeerAdvocate. I think a few ounces of a maple-bacon-bourbon imperial stout is plenty. For session beers, however, can you really judge a beer that is intended to be consumed in quantity based on a sip or two? We don't do sample flights at Sapwood Cellars. We sell half-pours for half the price of full pours. Not having a flight reduces people ordering beers they won't enjoy just to fill out a paddle. It also means that more people will give a beer a real chance: drinking 7 oz gives more time for your palate to adjust and for you to get a better feel for the balance and drinkability. What kills me is seeing people review one of our session beers based on a free "taste."

Another option is physical distance. Most people trekking to Casey, De Garde, or Hill Farmstead are excited to be going there and ready to be impressed. It helps that all three brew world-class sour beers, but I'm not sure the ratings would be quite as good if they were located in an easily accessible urban center.

The trick to getting onto the Top Beer lists is that you need a lot of reviews to bring the weighted average up close to the beer's actual average rating. That means having a barrier, but still brewing enough beer and being a big enough draw to get tens of thousands of check-ins and ratings. Organic growth helps: starting small and generating enough excitement to bring people from far and wide. Lines (like those at Tree House) then help to keep up the exclusivity; not many people who hate hazy IPAs are going to wait in line for an hour to buy the new release - unless it is to trade.
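As a rough sketch of why review count matters, here is a generic Bayesian-style weighted average in Python. The formula, the `prior_weight` of 500 ratings, and the 3.7 site-wide mean are all assumptions for illustration; this is not claimed to be the formula any of these sites actually use.

```python
def weighted_average(beer_mean, num_ratings, site_mean, prior_weight):
    """Blend a beer's own mean with the site-wide mean.

    prior_weight acts like a number of "phantom" ratings at the site mean,
    so beers with few ratings are pulled strongly towards site_mean.
    """
    total = num_ratings * beer_mean + prior_weight * site_mean
    return total / (num_ratings + prior_weight)

# Hypothetical numbers: the same 4.6 raw average with few vs. many ratings.
few = weighted_average(beer_mean=4.6, num_ratings=50, site_mean=3.7, prior_weight=500)
many = weighted_average(beer_mean=4.6, num_ratings=20000, site_mean=3.7, prior_weight=500)

print(f"50 ratings:     {few:.2f}")   # 3.78
print(f"20,000 ratings: {many:.2f}")  # 4.58
```

Under these assumptions, a 4.6 beer with 50 ratings lands barely above the site mean, while the same raw average with 20,000 ratings keeps nearly all of its score.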

Shelf Stability/Control

Many of the best rated beers are bulletproof. Big stouts and sours last well even when not handled or stored properly. This means that even someone drinking a bottle months or years after release is mostly assured a good experience. Most other styles really don't store well and are at their best fresh.

Conversely, hazy IPAs are one of the most delicate styles. I think it's funny that some brewers talk about hiding flaws in a NEIPA. While you sure don't need to have perfect fermentation control to make a great hop-bomb, they are not forgiving at all when it comes to packaging and oxygen pick-up. That's partly the reason that the best-regarded brewers of the style retail most of the canned product themselves. Alchemist, Trillium, Tree House, Tired Hands, Hill Farmstead, Aslin, Other Half, etc. all focus on direct-to-consumer sales. That ensures the beer doesn't sit on a truck or shelf for a long time before a consumer gets it. Consumers seem to be more aware than they once were (especially for these beers) that freshness matters.

Of course the margins are best when selling direct too, so any brewery that is able to sell cases out the door will. It can turn into a positive feedback loop, where the beer is purchased and consumed fresh, which makes the beer drinker more likely to return. This worked well for Russian River, which didn't bottle Pliny the Elder until there was enough demand that it wouldn't sit on the shelf for more than a week.

Sure, the actual packaging process (limiting dissolved oxygen) is important. But generally an OK job on a two-week-old can will win out over a great job on a two-month-old can.

The ultimate is to have people drink draft at your brewery. That way you can control the freshness, serving temperature, glassware, atmosphere, etc. That said, I notice the scores for our beers in growlers are usually higher than draft. I suspect that this is about self-selection: people who enjoyed the beer on draft are more likely to take a growler home and rate it well. It might also be a way for people to appear grateful to someone who brought a beer for them to try.

Reputation

This is one area where blind-judged beer competitions have a clear edge over general consumer ratings. When you know what you're drinking, that knowledge will change your perception. Partly it is subconscious: you give a break to a brewery that makes good beer, or after a lot of effort to procure a bottle you don't want to feel like you wasted money/time. It can also be more overt. I've had friends tell me that they'll skip entering a rating for our beer if it would be too low. I remember boosting the score of the first bottle of Cantillon St. Lamvinus I drank. It was so sour... but I didn't want to be that 22-year-old who panned what people consider to be one of the best beers in the world.

I could also be cynical, but I can imagine someone buying a case of a new beer to trade and wanting to make sure they get good "value" by helping the score for the beer. Might be doubly true for a one-off beer with aging potential!

Sapwood Cellars has done pretty well in our first year. Out of more than 100 breweries in Maryland, we have the third-highest average score (4.06) on Untappd. That isn't even close to meaning that our beer is "better" than anyone below us though. In addition to being solid brewers, we're helped by our selection of styles (mostly IPAs and sours) and by selling almost all of our beer on premise. Hopefully that feeds a good reputation, which further drives scores as we continue to hone our process.
